I presented as part of the Oxford Global Public Seminars last week—a gathering jointly hosted by the Comparative and International Education Research Group at Oxford University and the Center for Global Higher Education.

The audience was a mix of students and scholars from around the world, and I was struck by the conversation that followed.

The Q&A swiftly moved to the lived realities of technological change, with participants debating how state power and tech influence who moves where and why, the growing influence of tech leaders and companies in governance, and how climate change will act both as a driver of new migration patterns and as a fundamental reshaper of our geopolitical order.

Our conversation made one thing clear: this is not just about technological change.

We are witnessing the early stages of a fundamental reconfiguration of how agency operates across borders.

Artificial intelligence, blockchain networks, and extended reality technologies are what I've come to call "technologies of agency": not tools that simply allow traditional forms of internationalization to expand through new modalities, but transformative ecosystems that will create entirely new forms of internationalization.

Eras of International Student Mobility

To understand the future, we need a quick review of the past. Recently, I analyzed mobility data and policy documents to identify eras of international student mobility.

In each era, we've had to reconsider fundamental questions:

What counts as mobility?

Who governs it?

Why does it matter?

During the Cold War Era (1945–1989), student mobility was defined by state-sponsored exchanges that acted as soft power, with organizations like UNESCO facilitating elite education and fostering influential alumni networks in newly independent nations.

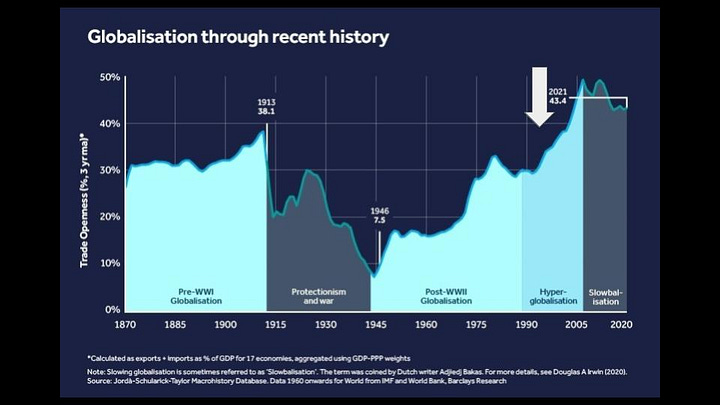

The Market Liberalization Era (1989–2008) shifted the focus to fee-paying students, propelled by programs like Erasmus and the rise of branch campuses. Universities became global recruiters, while education transformed into an export industry supported by liberalized visa policies and emerging private mobility brokers.

During the Strategic Competition Era (2008–2023), record student numbers, hybrid learning models, and tightened security—especially around STEM fields—reflected how geopolitics increasingly drove mobility as a tool for economic growth.

Now, in the Multipolar Securitization Era (2024– ), digital internationalization, AI-driven platforms, and Strategic Education Blocs are redefining mobility as strategic statecraft. This era sees a fragmented governance landscape where middle powers decentralize academic influence and digital learning transforms global engagement.

What struck me as I wrapped up the analysis is how our current definitions of mobility and agency remain anchored in outdated paradigms.

Technologies of Agency

Digital internationalization needs to bridge gaps, not widen them. But I’ve struggled with something as we enter this new era: these new technologies are not just tools.

After months of reflection and discussions with colleagues across disciplines, I've begun to call them technologies of agency.

Technologies of agency are systems, tools, and infrastructures that do not merely facilitate human action but actively shape, mediate, and govern decision-making, mobility, and access to power.

At their core, technologies of agency do not simply expand human capability—they reconfigure who has agency in the first place, reshaping the distribution of knowledge, power, and opportunity in the digital era.

Let’s explore three technologies that are reshaping agency in international higher education:

artificial intelligence,

blockchain, and

spatial computing.

Artificial Intelligence (AI) - Distributed Intelligence

"AI" is a small, imprecise, and overused term.

Like most people, I used to think of AI as simply a tool (data, algorithms, automation), something we use to be more efficient.

But I've been forced to reconsider.

AI is not just a tool; it is an ecosystem—an “environment” we inhabit, not just a “tool” we use.

AI is like electricity.

Much like electricity transformed manual labor in the Industrial Revolution, AI is transforming knowledge work in a cognitive revolution as it is increasingly woven into the fabric of daily life, work, and society.

We cannot opt out of a world shaped by electricity; we will not be able to opt out of the world being created by AI.

AI acts autonomously, sees patterns we cannot perceive, and operates at scales beyond our comprehension.

The OECD recently revised its definition of an AI system to capture these realities (OECD AI Policy Observatory, 2024).

The key changes? The increasing autonomy of AI systems that make decisions that impact humans.

Here are the revisions to the previous definition of “AI system,” with additions in bold and deletions in strikethrough:

"An AI system is a machine-based system that ~~can~~, for ~~a given set of human-defined~~ **explicit or implicit** objectives, **infers, from the input it receives, how to generate outputs such as** ~~makes~~ predictions, **content,** recommendations, or decisions **that can** influence~~ing~~ **physical** ~~real~~ or virtual environments. **Different** AI systems ~~are designed to operate with~~ vary~~ing~~ **in their** levels of autonomy **and adaptiveness after deployment**."

The edges of this new reality are already coming into view in Google’s co-scientist framework and in the scientific advances cataloged in Stanford HAI’s 2024 AI Index Report.

AI isn't just a prediction engine; it represents a "philosophical rupture," according to Tobias Rees, whose work lives at the intersection of philosophy, art, and technology.

By this, Rees means a fundamental break in how we understand the relationship between humans and machines, between intelligence and its embodiment.

Here is his basic argument:

For centuries, we've regarded intelligence as uniquely human. Machines were deterministic, programmable, devoid of thought. We told them what to do. They obeyed.

Deep learning broke that paradigm.

Unlike traditional symbolic AI, where every function was explicitly coded, deep learning systems are not programmed—they learn. They identify patterns, extract meaning, and generate outputs beyond what we explicitly instruct.

They don't just follow rules; they create them.
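To make the contrast concrete, here is a minimal sketch in Python, an illustration of my own rather than anything from Rees. The first function is "symbolic": a human writes the rule (logical AND) by hand. The second trains a single perceptron, a far simpler ancestor of today's deep networks, on four labelled examples and ends up reproducing the same behavior without anyone writing the rule. The function names, learning rate, and number of epochs are hypothetical choices for illustration.

```python
def symbolic_and(x1: int, x2: int) -> int:
    # Symbolic AI: a human encodes the rule explicitly.
    return 1 if (x1 == 1 and x2 == 1) else 0


def train_perceptron(examples, epochs: int = 10, lr: int = 1):
    """Learn weights from labelled examples; no rule is written by hand."""
    w1 = w2 = bias = 0
    for _ in range(epochs):
        for (x1, x2), target in examples:
            pred = 1 if w1 * x1 + w2 * x2 + bias > 0 else 0
            error = target - pred  # -1, 0, or +1
            # Nudge each weight in the direction that reduces the error.
            w1 += lr * error * x1
            w2 += lr * error * x2
            bias += lr * error

    def learned(x1: int, x2: int) -> int:
        # The "rule" now lives only in the learned numbers w1, w2, bias.
        return 1 if w1 * x1 + w2 * x2 + bias > 0 else 0

    return learned


# Only input/output examples are supplied, never the rule itself.
examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
learned_and = train_perceptron(examples)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, symbolic_and(a, b), learned_and(a, b))
```

Deep learning stacks millions or billions of such adjustable weights across many layers, which is why its learned "rules" are far harder to read off than the three numbers here.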

This raises the fundamental question: Is AI intelligent?

It learns from experience.

It derives logic from data.

It abstracts knowledge to solve new problems.

After much consideration, the answer is clear—yes, but in ways different from us.

We're witnessing a shift from AI as a “tool” to AI as an adaptive, co-evolving system—a “new digital species”—a form of intelligence that operates in latent spaces beyond human perception. This isn't just automation; it's cognition at a global scale.

The philosophical rupture that Rees describes collapses long-standing distinctions between humans and machines:

Humans—open, evolving, intelligent beings with interiority.

Machines—closed, deterministic systems, devoid of intelligence.

That binary no longer holds.

AI has access to more information than we do. It processes data faster. It sees hidden structures in patterns we cannot perceive.

Can we learn to work with systems that have different, and in some ways more powerful, forms of intelligence than the kind we possess?

To be clear, this is not the false binary of whether AI is “smarter” than us. It’s not a race between humans and AI; it’s a new relation, and the real question is how diverse intelligences will work together.

As Michael Levin highlights, humans are learning to live in a world of diverse intelligences, opening a new space for possible minds.

The realization of this new reality can induce a process of grief – a sign that you have really grappled with it. You may experience a sense of disillusionment.

But disillusionment isn’t a loss; it’s a form of liberation, because an illusion, by definition, isn’t something valuable. It’s something false. Losing it doesn’t diminish you; it clears space for something truer, more solid, to take its place.

Blockchain - Decentralized Trust

I initially thought of blockchain through the narrow lens of meme-coins and cryptocurrencies, like when social media lampooned the Ohio State University commencement speaker who told the graduating class to buy Bitcoin.

Those laughing the loudest likely don’t understand how blockchain works or its potential to help humanity build a better internet.

Blockchain is a new way to build networks.

It's not about cryptocurrencies—it's about creating new systems of trust.

It’s an exit from the walled gardens of corporate networks and a powerful counterweight to the centralizing forces of Big Tech, under which we “did not pay,” became “the product,” and watched our society grow more polarized.

Chris Dixon describes blockchain as a “new construction material for building a more open internet.”

It’s not about technology. It's about trust.

It’s not just about “digital gold”. It’s about self-sovereign identities.

The early internet was novel for its open protocols and decentralized structure. No one was in charge. That was the point. As centralization concentrated power in a handful of tech giants, internationalization was also increasingly shaped by corporate influence, driven by income generation and rankings.

Decentralized networks are our best chance to rewild the internet.

And blockchain opens new forms of decentralized governance.

Those new systems of trust and verification enable things that were previously impossible.

Degrees, transcripts, and professional certifications function as proof of learning, enabling mobility across borders and industries. But the system is slow, bureaucratic, and nationally bounded.

A degree earned in one country often faces delays, skepticism, and costly verification processes before being recognized elsewhere. Refugees flee without paperwork. Lifelong learners accumulate knowledge but lack institutional validation to prove it.

Blockchain introduces a parallel layer of trust for mobility, one that is decentralized, verifiable, and tamper-proof. Credentials can be securely stored, instantly authenticated and seamlessly shared across borders—without reliance on slow-moving intermediaries.
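A toy sketch helps show why verification no longer has to route through a slow-moving intermediary. The Python below is a hypothetical illustration, not a model of any production credential network: it assumes a single append-only, hash-chained ledger and leaves out the digital signatures and distributed consensus that real blockchains rely on. The issuer anchors a hash of the credential; a verifier anywhere recomputes the hash and checks the ledger, and an altered credential or a rewritten ledger entry fails the check. The class name, field names, and sample degree are invented for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, field


def digest(record: dict) -> str:
    """Hash a record deterministically (sorted keys, compact separators)."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class ToyLedger:
    """Hypothetical append-only ledger: each block stores a credential hash
    plus the previous block's hash, so rewriting history breaks the chain."""
    blocks: list = field(default_factory=list)

    def anchor(self, credential: dict) -> None:
        # Issuer side: record only the hash, never the credential itself.
        prev = self.blocks[-1]["block_hash"] if self.blocks else "0" * 64
        body = {"credential_hash": digest(credential), "prev_hash": prev}
        self.blocks.append({**body, "block_hash": digest(body)})

    def chain_is_intact(self) -> bool:
        # Recompute every link; any edited block changes its hash.
        prev = "0" * 64
        for block in self.blocks:
            body = {"credential_hash": block["credential_hash"],
                    "prev_hash": block["prev_hash"]}
            if block["prev_hash"] != prev or block["block_hash"] != digest(body):
                return False
            prev = block["block_hash"]
        return True

    def verify(self, credential: dict) -> bool:
        # Verifier side: recompute the hash locally, no call to the issuer.
        target = digest(credential)
        return self.chain_is_intact() and any(
            b["credential_hash"] == target for b in self.blocks
        )


ledger = ToyLedger()
degree = {"holder": "A. Student", "award": "MSc Education",
          "issuer": "Example University", "year": 2024}
ledger.anchor(degree)

print(ledger.verify(degree))                                # genuine credential
print(ledger.verify({**degree, "award": "PhD Education"}))  # altered credential
```

Anchoring hashes rather than documents is one common design choice: the ledger can be public while the credential itself stays with its holder.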

This isn't a threat to universities, the traditional arbiters of trust and verification, unless they fail to adapt.

It isn’t a joke. It's an opportunity that enhances agency and expands mobility.

Just look at the European Blockchain Services Infrastructure project. Universities across the EU are developing shared standards for credential verification, creating pathways for students to move between institutions without the traditional friction of credit transfer and degree recognition.

Universities don't need to change what they do.

They need to change how their impact is recognized.

This work is urgent given new (and growing) forms of mobility: networked and hybrid mobility, mobility of knowledge and skills driven by labor market displacement, and forced mobility due to conflict and climate change.

Spatial Computing - Hybrid Interaction

The first time I experienced spatial computing was at the Carnegie Library Apple Store in Washington, DC. I entered a simulated world where I sat at the edge of the ocean, working on my laptop, scrolling through my photos, and watching TV.

I left the Apple Store thinking, "This is interesting, but probably just a novelty." (And I didn’t buy the headset).

Most of us imagine spatial computing as just that: something for gamers and hobbyists who love immersive sports and entertainment.

But spatial computing is more than a headset.

What we call "spatial computing" today will simply be "the internet" tomorrow.

Right now, we browse the web through screens: flat, contained, two-dimensional portals. But when the internet becomes spatial, it is no longer confined to rectangles. We look at it, and it looks back.

As Fei-Fei Li, Founding Co-Director of Stanford's Human-Centered AI Institute, reminds us, spatial intelligence will allow AI systems to understand the real world.

This shift is profound because cross-border learning is social, contextual, and experiential. Same with cross-border research. New worlds open up:

Students can collaborate in cross-border teams in shared virtual spaces.

Scientists can conduct experiments in digital labs.

Students can immerse themselves in Ancient Rome, not just read about it.

Language learners can practice in fully simulated cultural environments.

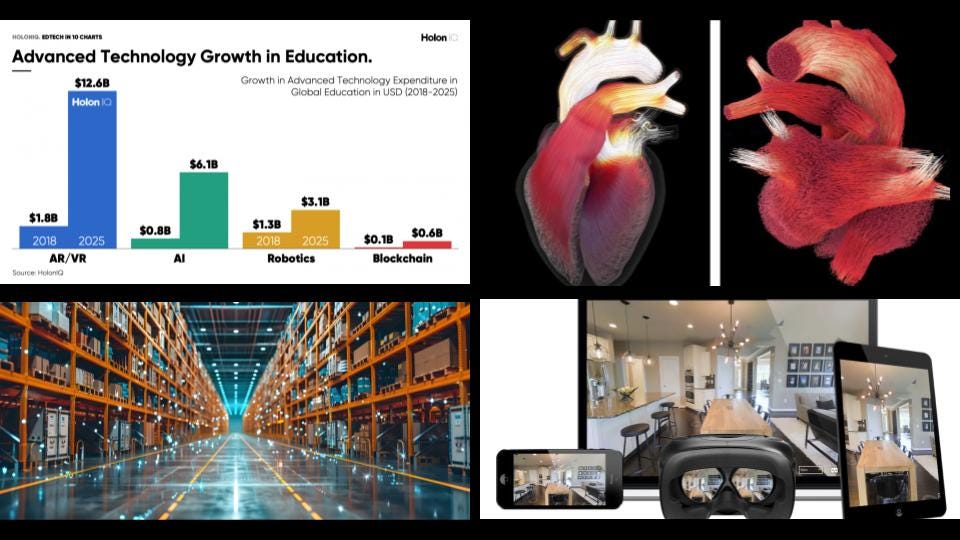

Augmented reality (AR), virtual reality (VR), and mixed reality (MR) are collapsing the distinction between digital and physical space, creating an intelligent, interactive world where presence is no longer limited by geography.

We must debate the implications of that shift.

To understand the implications of the new 3D internet, we need only look at how companies like Amazon are already using it.

Digital twins let people work with virtual replicas of forests, oil fields, cities, and supply chains, with uses ranging from energy optimization to logistics and immersive training.

Amazon's digital twins create real-time virtual replicas that integrate AI, robotics, and cloud computing. In healthcare, patient-specific digital twins at institutions like Johns Hopkins support precision medicine by simulating treatment outcomes with high accuracy, with the potential to reduce global healthcare costs by up to $300 billion annually. Companies like Matterport are at the forefront of this transformation, offering technology that creates immersive 3D virtual replicas of physical properties within 48 hours of capture.

It’s already happening on university campuses:

Digital twins optimize energy consumption through real-time infrastructure monitoring. The Florida Digital Twin project integrates AI to simulate urban energy systems and coastal resilience. Arizona State University employs digital twins to test layout modifications, enhance classroom utilization, and create immersive learning spaces where students collaborate as avatars.

Signals + Trends

Technology waves tend to come in pairs or triples.

15 years ago, it was mobile, social, and cloud. Today, it’s AI, blockchain, and new devices—probably robotics, self-driving cars, and VR.

Each technology is transformative in its own right, but it’s the convergence of all three that will make the impact so powerful.

Technological Acceleration

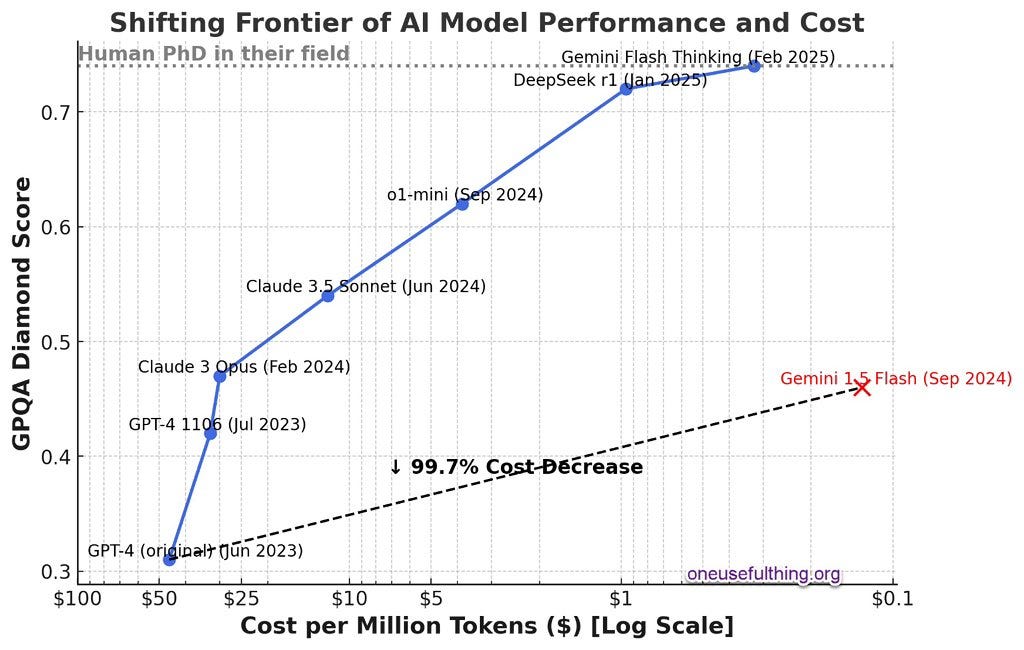

The cost of reaching a given level of AI capability is falling faster than Moore's Law, while model performance is improving at an exponential rate. This is compounded acceleration: leaps stack atop one another, outpacing both regulation and human adaptation.

2025 will be the year of AI agents – a year where AI systems gain greater agency, decoupling agency from life and interiority.

Dario Amodei’s statement on the Paris AI Action Summit framed the stakes in stark terms:

While AI has the potential to dramatically accelerate economic growth throughout the world, it also has the potential to be highly disruptive. A “country of geniuses in a datacenter” could represent the largest change to the global labor market in human history.

His timeline? 2026 or 2027. Almost certainly by 2030.

I find this prediction both plausible and unsettling. If Amodei is right—and I increasingly think he is—we're witnessing not just technological change but a fundamental reconfiguration of intelligence itself.

Policy Fragmentation

I've been closely following policy developments around national AI policies (with a new article coming out soon in Higher Education Quarterly), and I'm struck by how AI is advancing faster than governance can adapt.

The recent Paris AI Summit exposed what I see as the fractured geopolitics of intelligence.

This wasn't just a policy discussion—it was a power struggle over who will set the rules for AI's future.

U.S. Vice President J.D. Vance emphasized that U.S. dominance in semiconductors, software, and governance would dictate the global AI order.

The U.S. and U.K. refused to sign a global AI ethics declaration, framing AI governance as a matter of national security and competitive advantage, not multilateral cooperation.

China signed on, positioning itself as a leader in AI governance within a multipolar world order.

EC President Ursula von der Leyen announced a €200 billion AI investment, presenting Europe as an open-source alternative to U.S.-controlled AI ecosystems.

The message is unmistakable:

In the race to achieve AGI, countries are engaging in fierce competition and forging strategic alliances, all in the hope that the first breakthrough will confer a decisive geopolitical advantage and redefine global power dynamics.

And universities, traditionally sites of international collaboration and knowledge exchange?

They weren't at the table.

Quantum Wildcard

And, just as we’re beginning to grasp AI's trajectory, quantum computing looms—an acceleration beyond acceleration.

Last week, Microsoft announced Majorana 1, which it describes as the world's first quantum chip powered by a new Topological Core architecture. The chip relies on what Microsoft calls a "topoconductor," a material that enables a new state of matter that had previously existed only in theory. Satya Nadella said this would allow Microsoft to create a meaningful quantum computer "not in decades, as some have predicted, but in years."

And late last year, Google announced its Willow quantum chip. Willow performed a benchmark computation in under 5 minutes that would take one of today's fastest supercomputers approximately 10 septillion years, longer than the age of the universe.

Geopolitics in the Intelligence Age

The rapid advancement in technology is tied up in new forms of techno-politics.

While the world has become more multipolar, technology has consolidated under the control of a handful of tech giants and their ideological networks.

The "Silicon-DC" experiment, once a fringe idea, has become a central pillar of the Trump 2.0 coalition. The vision of governance behind it runs deeper than policy; it is ideological, a fusion of techno-accelerationism, market libertarianism, and statecraft.

This vision has profound implications for how universities function, particularly in their role as sites of knowledge creation and transmission.

Today, universities are geopolitical actors. Knowledge is a strategic asset.

Hans de Wit and I (2024) have argued that the new model of responsible internationalization increasingly mirrors Cold War-era restrictions—but in a multipolar world, the stakes are more complex.

The Council of the European Union's doctrine—"as open as possible, as closed as necessary"—acknowledges the tension. But the real question is:

Who decides what is "necessary," and who gets to participate in the new order of knowledge governance?

Intelligence Divides

The implications for mobility and agency?

I’ve been thinking a lot about this.

The digital divide was never just about connection—it was about who had the agency to participate in the digital world, to create, shape, and direct knowledge rather than simply consume it.

Now, AI redraws the map entirely, creating what I've started calling an intelligence divide—a gap between individuals and states who wield AI as an extension of their agency and those who are displaced, realigned, or subtly governed by its decisions.

This shift is not just about individuals—it is about nations, institutions, and entire knowledge systems. Some will see their intelligence and agency expand, while others will be increasingly shaped by logic they neither designed nor controlled.

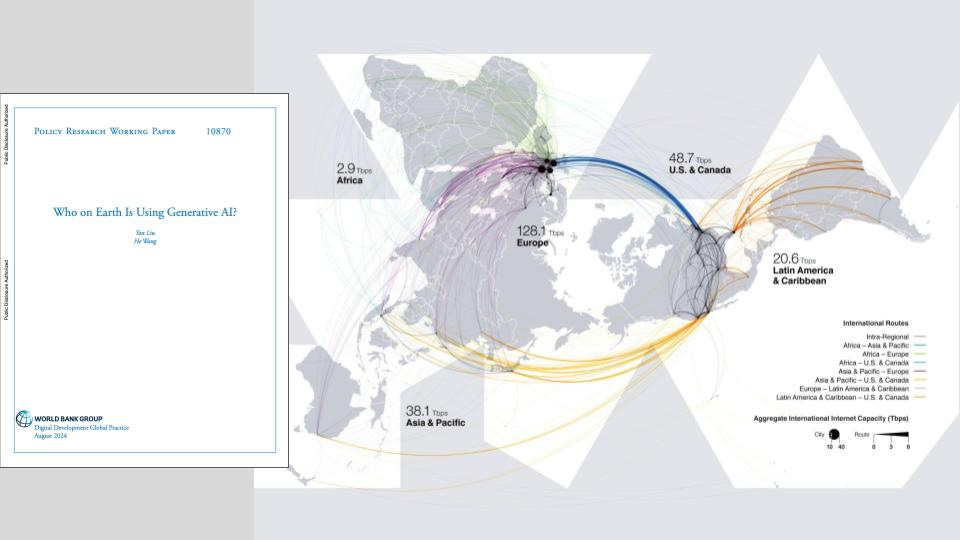

The World Bank's 2024 report, Who on Earth Is Using Generative AI?, explores the global adoption and implications of generative artificial intelligence technologies.

The good news:

Middle-income countries now drive more than 50% of global AI traffic, outpacing high-income nations in adoption relative to their GDP and infrastructure.

The tragic gap:

Low-income economies contribute less than 1% of global AI traffic, largely due to infrastructure gaps, limited English proficiency, and lower digital literacy.

Unlike the digital divide, this is not only about access to the internet, tools, or training; it is about who retains the ability to think, decide, and create freely in an era of machine-mediated cognition.

I find myself increasingly concerned by this concentration of power, not because technology itself is dangerous, but because its governance is being shaped by such a narrow set of actors.

As Naval Ravikant shared, “I’m not scared of AI. I’m scared of what a very small number of people who control AI do to the rest of us ‘for our own good’.”

Agency in the Intelligence Age

If AI is a philosophical rupture, as Tobias Rees suggests, then it forces us to rethink intelligence, agency, and knowledge from the ground up.

The real challenge isn't to predict the future.

The challenge is to create new conceptual categories for mobility and agency that help us navigate a world that no longer follows the logic of the past.

As Rees argues, AI isn't just a tool. It's a relation.

This conceptual shift requires us to move beyond thinking of technologies as instruments and toward understanding them as partners in complex, co-evolving systems.

We need new vocabularies—to describe hybrid agency, distributed intelligence, and the shifting boundary between human and machine cognition.

When I speak with university leaders about AI policies, the conversation often stalls because our language hasn't caught up to the reality we're trying to govern.

We also need ethical guardrails, not just against bias or misinformation but against algorithmic violence: the invisible ways these new “technologies of agency” encode and amplify structural power.

Reframing Our Questions

Let's revisit the three questions I posed in the introduction:

What counts as mobility?

Who governs it?

Why does it matter?

What I've come to believe:

Student mobility is no longer just about individual choice—it's about state power.

Although student mobility has never been solely about individual choice, the free market policies of the Market Liberalization Era (1989–2008) are being replaced by a reality where national security, geopolitics, and technological sovereignty dictate who moves, where, and why.

Mobility is no longer just about students “on the move”—it's about “distributing intelligence”.

Intelligence isn't merely transported; it is instantiated, recombined, and amplified across networks, reshaping who creates knowledge and how it moves.

Agency is no longer just human autonomy—it is being redefined by hybrid intelligence.

As AI systems learn, decide, and act at scales beyond human perception, the question is no longer just who governs mobility but how agency itself is being reconstructed—distributed across human-machine networks, shaping who or what gets to act in the world.

The 'third sector' is no longer peripheral—it is a rising power in mobility.

Mobility has long been a negotiation between universities, states, and markets, but today, technology companies and non-state actors are redrawing these boundaries—at times aligning with universities, at times bypassing them entirely.

Knowledge risks becoming securitized—treated as a strategic asset rather than a shared resource.

The real imperative is ensuring that knowledge flows freely across borders, disciplines, and institutions, and that its benefits are widely shared, not concentrated in a few elite centers.

Talent migration is accelerating and increasingly viewed as a strategic national asset.

As AI, biotech, and quantum computing redefine economic and military power, who moves, who stays, and who is barred from access is no longer incidental—it's intentional.

What is Distributed Progress?

Universities, tech companies, and governments are redrawing the maps of mobility: expanding access in some places, tightening control in others, often doing both at once. To keep focus, we must view the future through the lenses of both techno-optimism and techno-skepticism.

But let's not be naïve.

As Michel Foucault reminds us, power does not disappear—it reinvents itself.

The democratizing potential of technologies of agency exists alongside their capacity to create new forms of control and exclusion.

As Bruno Latour reminds us, power and agency do not reside in one actor but in the network itself, where decisions emerge from complex interdependencies.

This is the core challenge of distributed progress:

How can we ensure that intelligence, opportunity, and mobility are not just optimized but humanized, so that technology serves all of humanity rather than inequality in digital disguise?

I'm still working through these ideas, still refining the framework, still questioning my own assumptions. I imagine you are too.

Universities endure not by resisting change but by absorbing it, by maintaining their core values while adapting their forms and functions.

The Intelligence Age will demand nothing less.

Not resistance to technological change, but its conscious, deliberate integration into our highest educational values.

Not technology for technology's sake.

But technology in service of human flourishing and distributed agency.

This is the work that lies before us.

Not just to understand the new “technologies of agency”.

But to ensure they become technologies of access, opportunity, and distributed progress.