Universities are among the oldest continuously operating institutions in the Western world, predating nearly all existing corporations, most government agencies, and even many nations. For centuries, they've maintained a remarkable consistency of purpose while adapting to technological and social change.

But national AI policies are now pressuring universities to make choices they've historically been able to avoid.

This is not just another technological shift like the emergence of social, mobile, and cloud computing 15 years ago.

The new report from the Association for the Advancement of Artificial Intelligence (AAAI) puts it in stark terms: AI is no longer a purely technical domain—it is a major arena for strategic competition as nations vie for economic, military, and technological dominance. Issues such as AI-driven disinformation, cybersecurity risks, and even biosecurity threats (for example, in the realm of AI-powered protein design) are contributing to a volatile mix of competition and cooperation on the world stage. The report envisions a future where AI reconfigures international power dynamics, driving nations into fierce competition while simultaneously necessitating new models of global governance.

National policies are transforming the economic function, geopolitical role, and structural incentives of universities. Universities are being repositioned within competing national and corporate AI ecosystems.

What's happening isn't merely incremental change—it's an institutional phase transition that could fragment global scientific cooperation for national advantage. This shift threatens to reposition universities as competing agents of national power, with significant consequences for internationalization.

Four Ways Universities Are Positioned: The New Geopolitics of Knowledge

The repositioning of universities isn't happening through grand design but through emergent patterns of adaptation as institutions respond to competing pressures. These patterns reveal not just how universities are changing, but why this transformation represents a shift in how knowledge is created and shared.

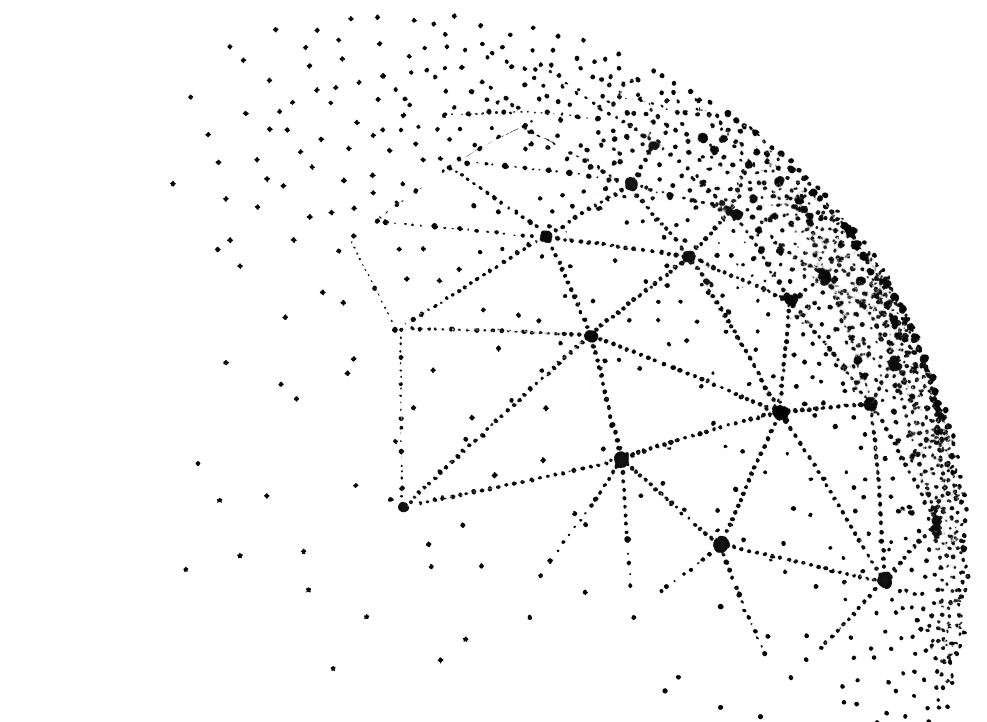

Our analysis of AI policies across eight state (US, China, EU, UK, India, Russia) and non-state (Big Tech, UNESCO) actors reveals four emergent patterns in how universities are positioned within AI ecosystems. Rather than coherent strategies, these "models" reflect contested compromises between competing institutional interests, funding mechanisms, and political constraints:

Market-Accelerator Model (US/UK)

Universities as talent pipelines optimizing for innovation speed, with funding fragmented across DARPA, NSF, UKRI, private industry, and venture capital. The seeming coherence masks significant tensions between defense priorities, commercial interests, and academic autonomy.

State-Directed Model (China/Russia)

Universities as strategic assets advancing national objectives, though implementation varies dramatically between central ministries, provincial governments, and military interests. What appears coordinated often involves bureaucratic competition for resources and influence.

Regulatory-Ethical Model (EU/UNESCO)

Universities as governance partners ensuring human-aligned AI, with funding balanced between public research programs (Horizon Europe) and public-private consortia. Internal conflicts between member states with different industrial policies create implementation gaps and cause fragmentation.

Inclusive-Development Model (India)

Universities as accessibility engines democratizing AI capabilities, though ambitious policy declarations often outpace implementation capacity, with significant urban-rural and linguistic divides.

Each pattern reflects what Bernard Stiegler describes as pharmacological orientations—distinct ways of understanding both the potential benefits (remedies) and harms (poisons) of AI technologies. These orientations aren't merely philosophical positions but operational frameworks that drive resource allocation, research priorities, and international collaborations.

The American system treats AI primarily as beneficial when it creates economic value and dangerous when it threatens national security. The Chinese system sees AI as beneficial when it enhances state capacity and dangerous when it undermines social harmony. Each system positions universities as part of the cure: a means of maximizing AI's benefits for national strategic priorities and of fighting the disease, whether that is the breakdown of social harmony or a threat to national security.

To understand how this plays out on the ground, let's examine how one nation—India—navigates this complex terrain, balancing global aspirations with domestic constraints in its attempt to position itself within the emerging AI landscape.

India's Gambit: When Educational Aspirations Meet Implementation Reality

The raw data is striking:

In our dataset, India dominates education-specific AI policies—accounting for nearly half the mentions of education in 1,836 documents. Yet, merely counting policy provisions does not capture their true implementation or impact.

What makes India's approach interesting isn't just the volume of policies but its distinctive economic logic. Rather than competing head-on with China and the U.S. on compute infrastructure (where it would start at a massive disadvantage), India is leveraging its educational system and demographic dividend as entry points into the global AI ecosystem.

What's really happening on the ground?

Some initiatives show genuine traction:

The All India Council for Technical Education (AICTE) has designated 2025 as the "Year of AI," with plans to integrate artificial intelligence into curricula across core engineering branches as interdisciplinary modules. This initiative aims to impact approximately 40 million students across 14,000 colleges nationwide, with institutions required to submit implementation plans by December 31, 2024. AICTE has already incorporated AI elements into the newly launched Electrical Engineering undergraduate curriculum as a model for other disciplines.

Meanwhile, the Ministry of Skill Development and Entrepreneurship launched "AI for India 2.0" in July 2023, a free online AI training program developed in collaboration with Skill India and GUVI, an IIT Madras-incubated startup. This NCVET- and IIT Madras-accredited program is available in nine Indian languages and is specifically designed to break language barriers and democratize AI education, particularly for rural populations. The initiative encourages colleges to establish "AI Student Chapters" as part of the national "AI for All: The Future Begins Here" campaign to foster innovation through workshops, hackathons, and collaborative projects.

This exemplifies what Hirschman called a linkage development strategy: creating economic connections through an area of comparative advantage. It's similar to how South Korea used targeted educational investments in semiconductor engineering during the 1980s to enter a high-barrier industry.

But India has a history of ambitious policy announcements that face significant implementation challenges.

So the key metric of success won’t be the volume of policy pronouncements, which indicates bureaucratic output rather than distributed progress. It will be the wage premium for AI-skilled workers from tier-two and tier-three Indian universities. If this premium rises, it indicates that India's investments are genuinely building human capital, not just producing credentials.
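One way to make this metric concrete, as a minimal sketch: treat the wage premium as the relative gap between the median wage of AI-skilled graduates and that of comparable graduates without those skills. The function name and the sample wages below are invented for illustration and do not come from our dataset.

```python
from statistics import median


def wage_premium(ai_skilled_wages, comparison_wages):
    """Relative gap between two groups' median wages (0.25 means 25% higher)."""
    base = median(comparison_wages)
    return (median(ai_skilled_wages) - base) / base


# Invented monthly wages for two small groups of graduates (illustrative only).
ai_skilled = [30000, 36000, 42000]
comparison = [28000, 30000, 32000]

premium = wage_premium(ai_skilled, comparison)
print(f"{premium:.0%}")
```

A single value says little on its own; the signal would be whether the premium rises across graduating cohorts. A raw median gap also ignores selection effects, so it is a starting point rather than a causal estimate.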

India's case illustrates a critical tension facing universities globally: the pressure to serve national strategic interests while simultaneously maintaining academic independence. This tension isn't unique to India—it's the central dilemma facing universities worldwide as AI policy reshapes their institutional purpose. And this brings us to a more fundamental question:

What happens when universities can no longer maintain their traditional position of institutional ambiguity?

Breaking Apart? When Universities Are Forced to Choose Sides

Universities have always been strange institutions.

They have historically existed in a state of ontological liminality—simultaneously serving public and private interests, national and global priorities, and practical and theoretical knowledge creation.

This ambiguity wasn't a bug but a feature, allowing them to serve multiple stakeholders while maintaining their essential character.

National AI policies compel universities to collapse into more defined states that serve specific strategic functions in emerging AI ecosystems.

Countries increasingly view AI as a strategic technology, similar to nuclear power in the 1950s. They're pressuring universities to align with national priorities through funding mechanisms, research restrictions, and explicit policy directives.

This represents not just a policy shift but a fundamental redefinition of what a university is.

The economics of this situation create a classic coordination problem:

Any individual university faces strong incentives to align with its government's AI priorities. MIT and Caltech risk losing millions in NSF research grants if they stray from U.S. priorities, and Tsinghua faces even steeper penalties in China.

But when institutions align around narrow national interests, global science fragments into silos, leading to duplicated work and lost opportunities for collaboration. No single university can break this cycle; a lone effort to maintain open global collaboration would leave it at a competitive disadvantage.

The end result resembles what game theorists call a suboptimal equilibrium.

Everyone is following what seems best for themselves, but when every university chases the same goals, the overall outcome is worse—like a traffic jam where each driver takes the shortest route, creating congestion that slows everyone down.
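The logic can be sketched as a two-university game. The payoff numbers below are hypothetical, chosen only to reproduce the structure described above (aligning is individually safer, mutual openness is collectively better); they are not estimates from our research.

```python
from itertools import product

ALIGN, OPEN = "align", "open"
STRATEGIES = (ALIGN, OPEN)

# Hypothetical payoffs as (university A, university B); higher is better.
PAYOFFS = {
    (OPEN, OPEN): (3, 3),    # both keep collaborating openly
    (OPEN, ALIGN): (0, 4),   # A stays open alone and falls behind
    (ALIGN, OPEN): (4, 0),   # B stays open alone and falls behind
    (ALIGN, ALIGN): (1, 1),  # both align nationally; science fragments
}


def is_nash(profile):
    """True if neither university gains by switching strategy on its own."""
    for player in (0, 1):
        current = PAYOFFS[profile][player]
        for alternative in STRATEGIES:
            deviated = list(profile)
            deviated[player] = alternative
            if PAYOFFS[tuple(deviated)][player] > current:
                return False
    return True


equilibria = [p for p in product(STRATEGIES, repeat=2) if is_nash(p)]
best_total = max(PAYOFFS, key=lambda p: sum(PAYOFFS[p]))

print(equilibria)  # [('align', 'align')]
print(best_total)  # ('open', 'open')
```

Under these assumed payoffs, the only stable outcome is mutual alignment, even though mutual openness gives both universities more. That gap between the equilibrium and the best joint outcome is what makes the equilibrium suboptimal.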

And that’s the result of the slow collapse of incentives in higher education.

The Collapse of Incentives: When Individual Rationality Undermines Collective Progress

We often speak of universities as if they were unified actors, like individuals making choices. In reality, they are shifting coalitions responding to different incentives—many of which now actively undermine their capacity to serve as stewards of scientific progress.

The collapse of incentives refers not merely to transient misalignments but to the systemic disintegration of the reward architectures that once motivated research as a global public good. What remains is a fragmented landscape where individual institutions, researchers, and nations prioritize choices that maximize immediate gains—even when those choices corrode long-term collective progress or amplify existential risks.

Global university rankings have become a powerful incentive. To improve their positions, institutions increasingly focus on metrics like citations and other quantifiable outputs. But this pressure can lead to a narrow pursuit of these metrics over high-risk research that might address pressing societal challenges or drive lasting progress.

The consequences for AI—a field where technological primacy may determine geopolitical dominance—are particularly dire.

Several issues plague the current system:

Funding Priorities

First, funding and institutional incentives favor the development of powerful, general-purpose AI systems—attracting billions in venture capital—while safety and alignment research, along with domain-specific applications such as climate modeling and drug discovery, largely rely on philanthropic grants in the tens of millions.

Publication Delays

Second, the traditional academic publication process has become anachronistic in the age of technological acceleration. With companies like OpenAI releasing new models every few months, waiting 12-18 months for peer review pushes cutting-edge work out of universities and into private labs—where the incentives align even less with the public good.

Financial Pressures

Third, financial pressures have warped priorities into a zero-sum game. When China offers unlimited military AI funding and U.S. tech firms outspend the NSF by orders of magnitude, research talent goes to the highest bidder. This monetization extends beyond salaries. As nations like the UAE and Saudi Arabia build domestic AI infrastructure, they lure scholars with promises of unregulated compute access. For a generation of researchers raised on the Silicon Valley ethos of “move fast and break things,” these offers prove irresistible, even as they further Balkanize global AI governance.

Universities once mitigated such pressures through tenure, academic freedom, and open science norms. Today, those mechanisms are overwhelmed.

Tenure-track positions have become rare, replaced by soft-money roles dependent on corporate grants.

Academic freedom erodes as researchers self-censor to preserve industry partnerships or comply with export-controlled collaborations.

Open science, meanwhile, collides with national security imperatives: the same compute restrictions meant to contain China also prevent U.S. labs from sharing code with South African colleagues, fragmenting the research commons.

The real issue isn't which country "wins" at AI—it's whether any institutional framework can reconcile individual incentives with humanity's collective needs. So far, the evidence suggests a troubling trajectory. But is this assessment too pessimistic? Is what we perceive as fragmentation actually something else entirely?

While these fractured incentives pose severe challenges, they prompt us to reconsider our fundamental assumptions. What if what appears as dangerous fragmentation from a Western perspective is actually a necessary transition toward a more diverse and resilient global knowledge ecosystem?

But Is Fragmentation a Problem?

The Western narrative often frames the emerging multipolar AI landscape as problematic fragmentation. But this perspective itself may reflect a particular geopolitical bias. What if this diversification represents not breakdown but breakthrough—a necessary evolution toward a more resilient global knowledge ecosystem?

Fragmentation arises when actors who might gain from collective coordination fail to secure or maintain cooperation. Consequently, what could have evolved into a unified, effective strategy instead disintegrates into isolated, self-interested maneuvers. In the case of AI, fragmentation risks isolating research, duplicating efforts, and blocking the synergistic gains of international collaboration.

Yet, what if global fragmentation isn’t the problem it’s made out to be?

Instead, what if it signals a shift toward a new, more diverse, and resilient equilibrium in global science?

Consider the benefits of allowing different systems to chart their own courses—making distinct errors and uncovering complementary insights. Isn’t this preferable to a system dominated by a few superpowers? The diversity of approaches can be a strength.

For example, Canada’s blend of robust public investment and ethical guidelines, Israel’s efficient military-to-commercial AI pipeline, and Singapore’s regulatory sandbox each serve as localized experiments with breakthrough potential. Similarly, middle powers like Turkey, Indonesia, Vietnam, Brazil, and India are not merely choosing sides between the United States and China; they’re forging “coalitions of the willing” that blend diverse strategies while preserving their autonomy.

Perhaps what appears as dangerous fragmentation from a Western perspective is actually the natural evolution toward a more distributed innovation system—one where diverse approaches compete and collaborate in a dynamic equilibrium. While the US remains a leader, power is shifting toward regional hubs with their own priorities, values, and approaches. Nations across Asia, Europe, and beyond are building interconnected networks and embracing open-source AI models that challenge the traditional narrative of Western technological hegemony.

Drawing on Joel Mokyr’s insights from A Culture of Growth, we know that a nation’s ability to innovate depends on its cultural and institutional openness to experimentation. Middle-power countries, unburdened by the inertia of long-established power structures, may be better positioned to adapt to disruptive technologies like AI. Their agility and willingness to experiment foster dynamic environments where diverse models interact, challenge old paradigms, and ultimately drive global progress beyond traditional US-led hegemony.

Evidence of this exists in the recent report from the World Bank, Who on Earth Is Using Generative AI?, which shows that middle-income countries now drive more than 50% of global AI traffic, outpacing high-income nations in adoption relative to their GDP and infrastructure.

This more optimistic interpretation doesn't negate the real challenges of coordination and cooperation in AI development, but it suggests that what appears as dysfunction from one perspective might be adaptive diversity from another.

Whether we view this emerging landscape as problematic fragmentation or healthy diversification, the multipolar reality is here to stay. And it raises a fundamental question: which essential characteristics of universities will survive this transformation?

The answer isn't found in nostalgic appeals to tradition but in universities' ability to evolve while preserving their core functions: maintaining critical distance from immediate political pressures, fostering intellectual pluralism across ideological divides, and engaging in genuinely long-term thinking that transcends election cycles and quarterly earnings reports. All these functions are increasingly at risk in an era where knowledge itself has become a strategic asset in global competition.

Values and Alliances in a Contested World

Discussions of university transformation often separate "normative" questions (what universities should be) from "positive" questions (how they are changing). This distinction ultimately fails to capture the reality of institutional transformation. As Hilary Putnam argued, fact and value are deeply entangled—even seemingly objective analyses reflect normative assumptions.

This entanglement is clear in how universities are being positioned in national AI policy strategies.

One surprising finding from our analysis is evidence of the emergence of strategic education blocs (SEBs): alliances of nations that strategically coordinate educational exchange to advance shared technological capabilities while protecting national security interests.

Different political blocs embed distinct values into their AI strategies:

Russia prioritizes sovereignty, subordinating universal ethics to national interests while maintaining tight state control.

India’s "#AIforAll" vision casts AI as a tool for inclusive development, emphasizing accessibility and economic leapfrogging.

The UK aims for a "pro-innovation regulatory environment," balancing openness with security concerns.

China seeks alignment with "core socialist values" and national interests.

The EU aims to facilitate "international convergence" while promoting European values.

Non-state actors bring further complexity: UNESCO promotes AI as a global public good, seeking to bridge technological divides through knowledge sharing. Big Tech companies push a "responsible AI" narrative, blending ethics with commercial interests and advocating for global interoperability.

These competing frameworks highlight how AI—and the knowledge institutions shaping it—are deeply embedded in broader ideological battles. Universities must navigate this landscape without losing their essential role as independent centers of inquiry.

What emerges from this analysis is a picture of universities not merely as passive recipients of policy directives but as global actors in reshaping the international knowledge order—sometimes reinforcing existing power structures, sometimes challenging them, but always navigating complex trade-offs between competing values and interests.

Universities in Flux: Adaptation and Resistance in the New Knowledge Order

The transformations we've traced—from India's educational gambit to the emergence of strategic education blocs—converge toward a fundamental question: what will universities become as they adapt to these unprecedented pressures, and what essential functions might be lost in this evolution?

For centuries, universities have adapted to shifting political and technological landscapes. What we are seeing today is not their demise but a deep transformation in their relationship to knowledge, power, and economic value.

The university of the future will need to be more agile, more specialized, and more transparent about the value systems it upholds. This isn’t a decline—it’s the next phase in the evolution of one of humanity’s most enduring institutions.

What matters now is recognizing what’s at stake. The fragmentation of global knowledge networks is a double-edged sword: while diverse approaches may spur innovation, they also undermine our collective ability to address shared challenges. In a non-cooperative game, fragmentation might yield short-term leadership benefits, but over the long term, it risks precipitating the collapse of the knowledge economy.

The decisions university leaders, researchers, policymakers, and citizens make today will echo for generations. Whether universities retain their distinctive role as critical, independent voices or become fully instrumentalized as tools of national competition will determine not just how AI develops, but who benefits from it, who controls it, and ultimately, what kind of societies it helps create.

The true challenge isn't simply technological but institutional: can we design governance systems that permit universities to serve national interests while preserving their essential role as spaces for independent thought? The answer remains uncertain, but the stakes could hardly be higher.

What do you think?

Distributed Progress is an experiment in slow, deliberative thinking about the transformative technologies reshaping our world.

+1s: Which points resonated with you, and why?

Gaps: What am I missing, undervaluing, or overvaluing?

Share: If you found this essay useful, please share it so more people can join the conversation on the Distributed Progress Substack.

This article draws from our systematic analysis of AI policy frameworks across eight major global actors, examining over 1,800 policy documents. Our research quantifies how universities are instrumentalized across governance contexts and documents the emergence of value-aligned strategic education blocs (SEBs) alongside traditional international academic networks.

Want to read our full analysis?

Download the pre-print of our full article at:

SSRN: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5173189

SocArXiv: https://doi.org/10.31235/osf.io/a42rs_v1

For more in-depth analysis from our research, read our article in the latest issue of International Higher Education: “National AI Security Strategies: Impacts on Universities.”